High Performance Computing (HPC)

What is High Performance Computing

High performance computing (HPC) has gained importance in many scientific fields in recent years and is seen as a key technology in science and industry. While numerical simulations in the natural sciences and engineering have a long history in HPC, big data analyses and computationally intensive applications in artificial intelligence are becoming increasingly important.

HPC makes it possible to answer complex scientific questions and to gain insights into previously unexplored areas and systems. Extremely demanding computational applications that are no longer feasible on normal computers because of their complexity or scale fall into the realm of high-performance computing. HPC systems provide many times the computing power and storage capacity of conventional desktop computers and simple servers. Scientific computing, an interdisciplinary approach to developing models, algorithms and software, also plays an important role.

High-performance computers, capable of processing huge amounts of data and performing complex calculations, are used in many different research fields, some of which are discussed in the following paragraphs.

Possible Applications of HPC in Science

Climate Research

In climate research, high-performance computers are used to predict weather and natural disasters more accurately and to simulate climate change. Climate researchers also simulate the behavior of the oceans and the atmosphere, which allows them to model and understand climate change more precisely.

Biomedical Sciences

In biomedical research, teams are using high-performance computing to study biomolecules and proteins in human cells to develop new procedures, drugs and medical therapies to improve our quality of life. In brain research, HPC is used to model and simulate the human brain at high resolutions.

Agricultural Science

HPC technology is also used to develop more sustainable agriculture, for example to optimize food production and to analyze sustainability factors. It is also used in studies on the efficient management of water and agricultural resources.

Energy Science

In energy science, researchers use powerful high-performance computers to develop systems for generating energy from renewable sources, to investigate new materials for solar cells and to optimize turbines for electricity generation.

Urban Planning

Similarly, HPC has made its way into urban planning, where questions concerning the efficient control of traffic infrastructure have to be solved. Simulation on high-performance computers is used to study traffic flows while taking into account the dispersion of pollutants. Moreover, air flows in major cities can be calculated using HPC technology.

However, a high-performance computer is much more than just a large and fast computer. It requires infrastructure for power supply, cooling and computer rooms, intelligent security concepts, and HPC experts who operate these systems. High-performance computing also encompasses innovative methods for modeling, simulation and the optimization of applications and processes.

Advantages and Disadvantages of HPC

Of Nodes and Cores

The architecture of high-performance computers is geared towards parallel processing so that very large computational workloads can be processed quickly, which is why they are also referred to as parallel computers. Linux is typically used as the operating system, since it offers the broadest support in the HPC field.

In simple terms, a high-performance computer consists of a large number of coupled computers working in parallel, the so-called compute nodes. Each node contains one or more processors (CPUs, central processing units), each with a certain number of cores, and often also GPUs (graphics processing units). The computing power of high-performance computers is expressed in teraflops (TFLOPS, 10^12 floating-point operations per second) and petaflops (PFLOPS, 10^15 floating-point operations per second).
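The performance figures quoted for a system are usually its theoretical peak performance, which follows from a simple multiplication: nodes × cores per node × clock rate × floating-point operations each core can complete per clock cycle. The minimal sketch below computes this for a hypothetical cluster; the function names and all input values (100 nodes, 64 cores per node, 2.0 GHz, 16 operations per cycle) are illustrative assumptions, not the specification of any real machine. Performance measured on real workloads is always lower than this theoretical peak.

```python
def peak_flops(nodes: int, cores_per_node: int, clock_hz: float, flops_per_cycle: int) -> float:
    """Theoretical peak: every core completes flops_per_cycle operations on every clock cycle."""
    return nodes * cores_per_node * clock_hz * flops_per_cycle


def format_flops(flops: float) -> str:
    """Express a FLOPS value in TFLOPS (10**12) or PFLOPS (10**15)."""
    if flops >= 1e15:
        return f"{flops / 1e15:.1f} PFLOPS"
    return f"{flops / 1e12:.1f} TFLOPS"


# Hypothetical cluster: all numbers are illustrative assumptions.
peak = peak_flops(nodes=100, cores_per_node=64, clock_hz=2.0e9, flops_per_cycle=16)
print(format_flops(peak))  # prints "204.8 TFLOPS"
```

Doubling any single factor, such as the number of nodes or the operations per cycle, doubles the theoretical peak, which is why vendors advertise wide vector units as well as high core counts.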

For example, the "HAWK" supercomputer at the High Performance Computing Center Stuttgart (HLRS) has a peak performance of 26 petaflops and more than 5,500 nodes with a total of 720,000 cores. This makes it one of the most powerful computers in Europe.

A second example: the research supercomputer Justus II in Baden-Württemberg, with its 33,696 cores, corresponds roughly to a system of 15,000 conventional laptops. When a particularly large number of processors can access shared peripherals and a partially shared main memory, the term supercomputer is also used. For an overview of the most powerful supercomputers in the world, see the Top500 list.

Parallel computers are designed to break down extremely demanding computing tasks, also known as jobs, into individual subtasks. A job management system distributes these subtasks to the nodes, where they are executed simultaneously. How the work is distributed and synchronized has a major effect on computing performance. Job parts running on different nodes usually communicate via the Message Passing Interface (MPI), a standardized library interface for exchanging messages between processes. To achieve fast communication between the individual processes, the nodes are connected by a high-speed, low-latency network such as InfiniBand.
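Breaking a job into subtasks can be illustrated with a block decomposition, a pattern common in MPI programs: each of `size` processes, identified by its `rank` as in MPI, works on one contiguous slice of the data, and the partial results are then combined in a reduction step. The minimal sketch below assumes a simple global sum as the workload, and the function names are hypothetical. For illustration it runs the ranks one after another in a loop; in a real MPI program each rank would be a separate process on its own core or node, and the final combination would be done by an MPI collective operation such as `MPI_Reduce`.

```python
def block_range(n: int, size: int, rank: int) -> tuple[int, int]:
    """Return the half-open index range [start, stop) owned by `rank`.

    The n items are split as evenly as possible among `size` ranks;
    the first n % size ranks receive one extra item.
    """
    base, extra = divmod(n, size)
    start = rank * base + min(rank, extra)
    stop = start + base + (1 if rank < extra else 0)
    return start, stop


def partial_sum(data: list[int], size: int, rank: int) -> int:
    """Work done by a single rank: sum only its own slice of the data."""
    start, stop = block_range(len(data), size, rank)
    return sum(data[start:stop])


def decomposed_sum(data: list[int], size: int) -> int:
    """Simulate `size` ranks sequentially, then reduce their partial results.

    In an MPI program the partial sums would be computed simultaneously on
    different cores or nodes and combined with a collective such as MPI_Reduce.
    """
    partials = [partial_sum(data, size, rank) for rank in range(size)]
    return sum(partials)  # the reduction step


data = list(range(1, 11))  # 1 + 2 + ... + 10
for rank in range(3):
    print(rank, block_range(len(data), 3, rank))  # (0, 4), (4, 7), (7, 10)
print(decomposed_sum(data, size=3))  # prints 55
```

Giving the first `n % size` ranks one extra item keeps the load imbalance to at most one item per rank, so no node sits idle for long waiting for a slower neighbor before the reduction.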

Performance is further increased by a parallel distributed file system located on storage that all nodes can access directly. High-performance computers can be used by several users simultaneously; these users then compete for nodes, storage, network bandwidth and other resources while their submitted jobs are processed. To use an HPC cluster fairly and efficiently, an HPC resource manager, also called a workload manager, is used. It automatically allocates computing resources according to scheduling policies and also performs management, monitoring and reporting tasks. The high-performance computers themselves do not provide backup storage for long-term retention, as this would severely limit computing power; connected storage infrastructures are used for this purpose.
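How a workload manager shares a cluster among queued jobs can be sketched with the simplest possible policy, strict first-come-first-served: each job requests a number of nodes and a runtime, and it starts as soon as enough nodes are free and every earlier job has started. The `Job` class and `fifo_schedule` function below are hypothetical illustrations, not the interface of any real workload manager; production systems add priorities, fair-share accounting and backfilling, which starts small jobs early in otherwise idle gaps.

```python
import heapq
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    nodes: int    # number of compute nodes requested
    runtime: int  # run time in arbitrary time units


def fifo_schedule(jobs: list[Job], total_nodes: int) -> dict[str, int]:
    """Return the start time of each job under strict first-come-first-served.

    A job starts only when enough nodes are free and never before the
    job submitted ahead of it (no backfilling).
    """
    free = total_nodes
    running: list[tuple[int, int]] = []  # min-heap of (end_time, nodes)
    now = 0
    starts: dict[str, int] = {}
    for job in jobs:
        if job.nodes > total_nodes:
            raise ValueError(f"{job.name} requests more nodes than the cluster has")
        # Wait for the earliest-finishing jobs until enough nodes are free.
        while free < job.nodes:
            end_time, nodes = heapq.heappop(running)
            now = max(now, end_time)
            free += nodes
        starts[job.name] = now
        free -= job.nodes
        heapq.heappush(running, (now + job.runtime, job.nodes))
    return starts


queue = [Job("A", 2, 10), Job("B", 2, 5), Job("C", 3, 4), Job("D", 1, 2)]
print(fifo_schedule(queue, total_nodes=4))
# prints {'A': 0, 'B': 0, 'C': 10, 'D': 10}
```

In this example job D waits until time 10, although two nodes are idle from time 5 when B finishes. A backfilling scheduler could run D from time 5 to 7 in that gap without delaying C, which is one reason real workload managers use more elaborate policies than plain FIFO.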

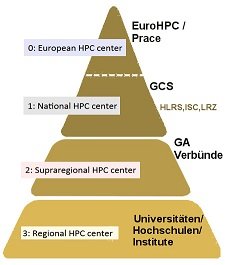

HPC Performance Pyramid

Levels 0 to 3, also referred to as tiers, reflect the increasing computing capacity, the increasing degree of parallelization of the simulation codes and the growing amount of data processed from the base of the pyramid (tier 3) to its top (tier 0). At the base, tier 3, a broader range of HPC systems is available to researchers and early-career scientists, who also receive more extensive support at this level.

Overview of Selected HPC Structures and HPC Projects

PRACE

To provide international researchers and industry partners with access to computing and data management resources and services for their large-scale scientific and technical applications at the highest performance level, 26 member countries founded PRACE (Partnership for Advanced Computing in Europe).

EuroHPC JU

At the European level, the European High Performance Computing Joint Undertaking (EuroHPC JU) was established to implement the European High Performance Computing initiative. EuroHPC is a public-private partnership between the European Union, the participating Member States, the European Technology Platform for High Performance Computing (ETP4HPC) and the Big Data Value Association (BDVA). Its members pursue the goal of strengthening high-performance computing in Europe and advancing the development of supercomputers. Based on competitive European technology, a pan-European supercomputing infrastructure in the exascale range is being developed to process huge amounts of data and thus support research and innovation activities.

Gauß Centre for Supercomputing (GCS)

The GCS (Gauss Centre for Supercomputing) is the German partner in the PRACE initiative. It is an association of Germany's three national supercomputing centers: the HLRS (High Performance Computing Center of the University of Stuttgart), the JSC (Jülich Supercomputing Centre) and the LRZ (Leibniz Supercomputing Centre, Garching near Munich). The goal of the GCS is to promote and support scientific supercomputing. The continuous development of the three centers is funded by the German Federal Ministry of Education and Research and the respective state ministries.

Gauß-Allianz (GA)

The Gauss Alliance, a non-profit association for the promotion of science and research, is composed of the major scientific computing centers in Germany as well as other HPC-relevant scientific institutions. It promotes HPC as an independent strategic research field and improves the international visibility of German research efforts in this area. Its focus is on researching and developing strategies to improve the efficiency, applicability and usability of high-performance computing and supercomputing.

Network for National High Performance Computing (NHR)

In 2018, the Joint Science Conference (GWK) of the federal and state governments agreed to permanently establish National High Performance Computing (NHR) capabilities. National High Performance Computing capabilities will further develop the technical and methodological strengths of high-performance computing centers in a national network.

With the GWK decision of November 13, 2020, eight tier-2 computing centers of the performance pyramid were included in NHR funding. This decision was preceded by a recommendation from the Strategy Committee based on a competitive, science-driven selection process.

German Climate Computing Center (DKRZ)

The German Climate Computing Center (www.dkrz.de) is a national service facility and an important partner in climate research. Its high-performance computers, data storage and services form the central research infrastructure for simulation-based climate science in Germany.

North German Network of High Performance and Supercomputing

Baden-Württemberg bwHPC and its User Support

In Baden-Württemberg, a state-wide high-performance computing infrastructure has been established through the bwHPC (High Performance Computing in Baden-Württemberg) initiative as part of an implementation concept, and it will be expanded with additional storage infrastructures in the future. A special feature of the initiative is that it also offers cross-university user support, known as bwHPC-S5 (Scientific Simulation and Storage Support Services), in order to open up the resources to as broad a scientific user community as possible. In this way, a bridge has been built between researchers and IT.

Hessian Competence Center for High Performance Computing (HKHLR)

The HKHLR, to which the five Hessian universities of Darmstadt, Frankfurt, Giessen, Kassel and Marburg belong, is a component of the Hessian HPC ecosystem. Its core task is to support Hessian scientists in the efficient use of high-performance computing.

Competence Network for Scientific High Performance Computing in Bavaria (KONWIHR)

The main goal of KONWIHR is to provide technical support for the use of high-performance computers and to broaden their use in research and development projects. Close cooperation between disciplines, users and participating computing centers, as well as efficient transfer and rapid application of results, are important.

North Rhine-Westphalia Competence Center for High Performance Computing (HPC.NRW)

The state of North Rhine-Westphalia is home to a diverse ecosystem of high-performance computing facilities at almost all university locations. Against this background, the HPC working group of the Digital University NRW (HPC.NRW) has developed an HPC state concept with the aim of further developing the HPC ecosystem in North Rhine-Westphalia within the national HPC context.

Alliance for High Performance Computing Rhineland-Palatinate (AHRP)

The AHRP alliance is a joint institution of the University of Mainz and TU Kaiserslautern. Its goal is to coordinate the two universities’ high-performance computing activities in Rhineland-Palatinate on a sustainable basis. In addition to its training program, the AHRP holds an annual joint workshop with the Hessian Competence Center for High Performance Computing.

Vienna Scientific Cluster (VSC)

The Vienna Scientific Cluster is an association of several Austrian universities that provides its users with access to a supercomputer. The current flagship of the VSC, VSC-5, has an aggregate peak performance of 4.3 petaflops and is significantly more energy-efficient than its predecessor.